In the automotive world, a cold start occurs when you start the engine in a cold environment. In the serverless world, it happens when a cloud function is called while no instance of that function is available. In this blog post, we take a quick look at why cold starts happen and how the product team’s expectations can still be met.

An instance might be unavailable because demand for the function has increased. Under a dynamic load, the number of available function instances follows that load.

In comparison to virtual machines or container solutions, new function instances are available faster and without a need to configure scaling. They scale by use, and you pay for what you use.

Why are cold starts a hot topic in the serverless world?

The answer is parallelism. By definition, a function instance handles only one invocation at a time. Under a dynamic load, the number of function instances therefore rises quickly to match the actual need, which requires new instances that have never executed before. When the load drops again, there is typically a grace period, sometimes up to hours, during which an instance that handled a previous request stays available for new requests.
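As a rough illustration of how load translates into instances, the sketch below applies Little's law (concurrency = arrival rate × average duration) under the one-invocation-per-instance assumption. The function name and the example numbers are made up for illustration; real providers may scale differently.

```python
import math


def estimated_instances(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate how many instances are busy at once (Little's law)."""
    if requests_per_second < 0 or avg_duration_seconds < 0:
        raise ValueError("rate and duration must be non-negative")
    # Each instance handles one invocation at a time, so the number of
    # concurrent invocations equals the number of instances needed.
    return math.ceil(requests_per_second * avg_duration_seconds)


# Hypothetical spike: load grows from 10 to 200 requests per second,
# and each invocation takes 0.25 seconds on average.
before = estimated_instances(10, 0.25)
after = estimated_instances(200, 0.25)

# Every additional instance has to be started from scratch: a cold start.
new_cold_starts = max(0, after - before)
print(before, after, new_cold_starts)
```

In this made-up spike, nearly all of the instances needed at peak are new, which is why a sudden rise in load and cold starts go hand in hand.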

What happens during a cold start?

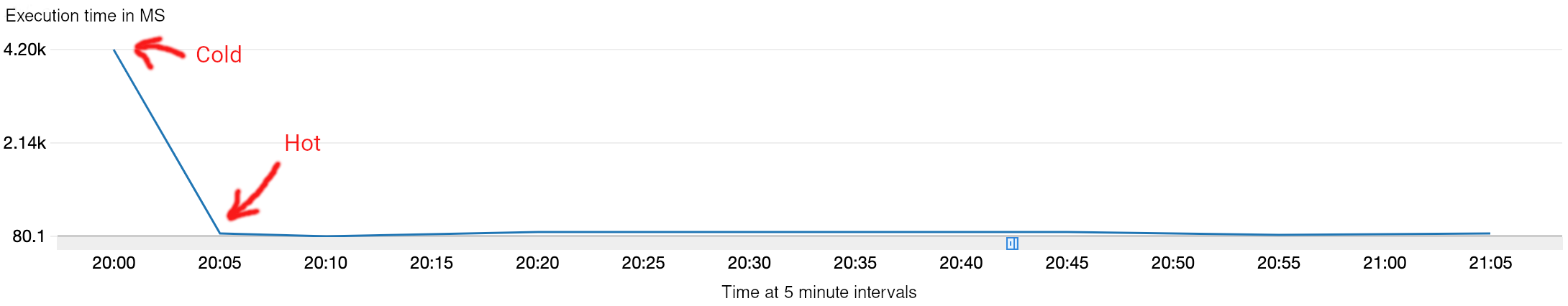

The figure shows an initial hot start of a non-I/O-intensive function, followed by one hour of consistent load.

Two things. First, the cloud provider makes the execution environment available for a specific programming platform. Second, your function is started and any dependencies defined in its code are prepared. This immediately makes clear that you face a couple of inevitabilities and a couple of choices.
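The second step can be made visible from inside a function. The sketch below assumes a Python runtime that loads the module once per instance and then calls a `handler(event, context)` entry point for every invocation, as AWS Lambda does; the field names in the returned dictionary are hypothetical.

```python
import time

# Module-level code runs once per function instance, so it executes
# during the cold start and not on later (warm) invocations.
_INSTANCE_STARTED_AT = time.time()
_is_cold = True


def handler(event, context):
    """Report whether this invocation landed on a fresh instance."""
    global _is_cold
    cold = _is_cold
    _is_cold = False  # every later invocation on this instance is warm
    return {
        "cold_start": cold,
        "instance_age_seconds": time.time() - _INSTANCE_STARTED_AT,
    }
```

Logging the `cold_start` flag is one simple way to count how often cold starts actually occur in a given environment.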

The general startup and instrumentation of the execution is something you don’t have any control over; some cloud providers are just faster and/or offer more features. The language choice is becoming less impactful.

In the past, Node.js and Python had the shortest cold start times, but other languages are being optimized as well. The dependencies used inside your function, and the way they are initialised, affect your cold start time too. Make sure your functions are focused on a single purpose, or at least stay mindful of dependencies that are only rarely used.

For more on language optimizations for Java, check out our blog post on GraalVM on AWS Lambda.

How to deal with cold starts?

Cold starts are most visible in low-use environments, so let’s start there. A low-use environment can be a function that is called only sparingly, or a development or acceptance environment that is barely used.

Depending on your cloud provider’s features, you can solve this with a provisioned capacity setting, such as provisioned concurrency on AWS Lambda, or resort to scheduled warming. For low use combined with low latency requirements, you can always go back to a container solution as well. In high-use environments, it is advisable to agree on percentile-based latency requirements with the business division as a nonfunctional requirement, for example a 300 ms response time at the 95th or 99th percentile.
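To make a percentile-based requirement concrete, the sketch below checks measured latencies against a target using the nearest-rank percentile method. The helper names and the sample numbers are made up for illustration; monitoring tools may compute percentiles slightly differently.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_target(latencies_ms, pct=95, target_ms=300):
    """Check a requirement such as '95th percentile within 300 ms'."""
    return percentile(latencies_ms, pct) <= target_ms


# Made-up measurements: 97 warm requests at 40 ms, 3 cold starts at 900 ms.
samples = [40] * 97 + [900] * 3
p95_ok = meets_latency_target(samples, pct=95)
p99_ok = meets_latency_target(samples, pct=99)
print(p95_ok, p99_ok)
```

With these made-up numbers, the 95th percentile target is met even though three requests were slow, while the 99th percentile target is not. The choice of percentile therefore determines how many cold starts the requirement tolerates.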

In these environments, the chance of hitting a cold start rises as the load increases. Still, cold starts are dwarfed by the total number of requests. When you have an event-based, asynchronous serverless workload, cold starts are rarely an issue.

Very few risks are involved here, so this might be a good target for your first foray into a serverless solution.

We end with the stereotypical “Serverless is no silver bullet.”

Scalability yes, pay per use yes, percentile-based low latency yes, but for consistently low latency combined with constant or low consumption, containers are your best bet, especially when the containers need no scaling and only limited development resources are available to you.

Are you interested in finding out what migrating to the cloud and using serverless functions can mean for your organisation?

Get in touch with us